Video signals are compressed either to reduce the bandwidth required on the transmission channel (e.g. satellite TV or a network) or to reduce the amount of data to be stored (e.g. on DVD or Blu-ray). There are two kinds of compression: lossless and lossy. Lossless compression allows the encoded data to be decoded without any difference from the original. Lossy compression discards part of the original data, on the assumption that not all of it has to be present in the decoded video to achieve good perceptual quality.
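The difference between the two kinds can be illustrated with a minimal Python sketch. It assumes a made-up row of 8-bit samples, uses zlib as a stand-in for a lossless coder, and uses a toy "drop the lowest bits" scheme as a stand-in for a lossy one; neither is what a real video codec does.

```python
import zlib

# Hypothetical 8-bit samples standing in for one row of pixel values.
samples = bytes([52, 55, 61, 66, 70, 61, 64, 73] * 32)

# Lossless: zlib (DEFLATE) reproduces the input exactly after decoding.
packed = zlib.compress(samples)
restored = zlib.decompress(packed)
lossless_ok = restored == samples

# Lossy (toy example): keep only a coarse version of every sample.
# The discarded detail cannot be recovered, so only an approximation remains.
STEP = 8
coarse = bytes(v // STEP for v in samples)
approx = bytes(v * STEP + STEP // 2 for v in coarse)
max_error = max(abs(a - b) for a, b in zip(samples, approx))

print(lossless_ok, len(samples), len(packed), max_error)
```

The lossless path returns the original bytes, while the lossy path only guarantees that every sample stays within half a step of its original value.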

A typical, simplified lossy video compression algorithm includes the following steps:

1. Color transformation and chroma subsampling

Since the human visual system is considerably less sensitive to color information than to lightness, it is common practice to convert the RGB data from the camera into a luma channel Y and two color-difference channels Cb and Cr. The two chroma channels can then be subsampled (e.g. at a ratio of 4:2:2), which reduces the amount of data while largely preserving the perceived quality.
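As a rough illustration of this step, the following Python sketch converts a row of RGB pixels to YCbCr and applies 4:2:2 subsampling. It assumes the full-range BT.601 weights used by JFIF; video codecs often use a limited range or BT.709 weights instead. The function names and the pixel values are made up for this example.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YCbCr (BT.601 weights, as in JFIF)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def subsample_422(chroma_row):
    """Halve the horizontal chroma resolution by averaging neighbouring pairs."""
    return [(chroma_row[i] + chroma_row[i + 1]) / 2
            for i in range(0, len(chroma_row) - 1, 2)]

# Hypothetical row of four RGB pixels: two reddish, two bluish.
row = [(255, 0, 0), (250, 10, 5), (0, 0, 255), (10, 5, 250)]
ycbcr = [rgb_to_ycbcr(*pixel) for pixel in row]

luma = [y for y, _, _ in ycbcr]                   # kept at full resolution
cb = subsample_422([c for _, c, _ in ycbcr])      # half the horizontal resolution
cr = subsample_422([c for _, _, c in ycbcr])

print(len(luma), len(cb), len(cr))
```

For every four luma samples in a row, only two Cb and two Cr samples remain, so the chroma data is halved.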

2. Block processing

The image is divided into blocks (e.g. 4x4 or 8x8 pixels). Each block is then converted (losslessly, apart from rounding) into the frequency domain using a discrete cosine transform (DCT) or a discrete wavelet transform (DWT).
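The block transform can be sketched in Python as follows. This is a direct, unoptimized orthonormal 2D DCT-II on a made-up 8x8 block, not the fast integer transform a real encoder would use; all names are chosen for this example only.

```python
import math

N = 8  # block size

def scale(k):
    """Normalization factor that makes the transform orthonormal."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

def basis(i, k):
    """Cosine basis function of frequency k sampled at position i."""
    return math.cos((2 * i + 1) * k * math.pi / (2 * N))

def dct_2d(block):
    """Forward 2D DCT-II: pixel values -> spatial frequency coefficients."""
    return [[scale(u) * scale(v) * sum(block[x][y] * basis(x, u) * basis(y, v)
                                       for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]

def idct_2d(coeffs):
    """Inverse 2D DCT: spatial frequency coefficients -> pixel values."""
    return [[sum(scale(u) * scale(v) * coeffs[u][v] * basis(x, u) * basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]

# Hypothetical 8x8 block: a smooth horizontal gradient.
block = [[16 * y for y in range(N)] for x in range(N)]
coeffs = dct_2d(block)
restored = idct_2d(coeffs)
max_error = max(abs(block[x][y] - restored[x][y])
                for x in range(N) for y in range(N))

print(round(coeffs[0][0], 3), max_error)
```

The round trip reproduces the block up to floating-point rounding, which is why the transform itself is not the lossy part of the pipeline.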

3. Quantization

The spatial frequency coefficients of each block are quantized using a matrix defined in the encoder: each coefficient is divided by the corresponding matrix entry and rounded, which reduces the amount of data. This is the actual lossy part of the encoding.
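A minimal Python sketch of quantization is given below. The quantization matrix (step size growing linearly with frequency) and the coefficient values are invented for illustration and are not taken from any standard.

```python
def make_quant_matrix(n=8, base=8, slope=4):
    """Hypothetical matrix: the step size grows with spatial frequency."""
    return [[base + slope * (u + v) for v in range(n)] for u in range(n)]

def quantize(coeffs, qmatrix):
    """Divide each coefficient by its step size and round to an integer level."""
    return [[round(c / q) for c, q in zip(c_row, q_row)]
            for c_row, q_row in zip(coeffs, qmatrix)]

def dequantize(levels, qmatrix):
    """Decoder side: multiply the levels by the same step sizes."""
    return [[level * q for level, q in zip(l_row, q_row)]
            for l_row, q_row in zip(levels, qmatrix)]

# Hypothetical coefficients: a large DC value and quickly decaying higher frequencies.
coeffs = [[800.0 if u == v == 0 else 30.0 / (1 + u + v) for v in range(8)]
          for u in range(8)]

qmatrix = make_quant_matrix()
levels = quantize(coeffs, qmatrix)
restored = dequantize(levels, qmatrix)
nonzero = sum(1 for row in levels for level in row if level != 0)

print(levels[0], nonzero)
```

Most high-frequency coefficients become zero, and the restored values differ from the originals by at most half a step size. That rounding error is the information lost.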

4. Zig-zag scan and entropy encoding

The quantized coefficients are reordered so that the low-frequency components come first and the high-frequency components follow. Because of the reordering pattern, this is often called a zig-zag scan. Subsequently, lossless entropy encoding is applied.
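The scan order can be sketched in Python as shown below, using a made-up 4x4 block of quantized levels. The run-length step is only a simplified stand-in for what happens before the entropy coder; an actual encoder would then apply, for example, Huffman or arithmetic coding to these symbols.

```python
def zigzag_indices(n):
    """Return (row, col) positions of an n x n block in zig-zag order."""
    order = []
    for s in range(2 * n - 1):                 # s = row + col selects one anti-diagonal
        diag = [(r, s - r) for r in range(n) if 0 <= s - r < n]
        if s % 2 == 0:
            diag.reverse()                     # alternate the direction on every diagonal
        order.extend(diag)
    return order

def run_length_encode(seq):
    """Encode as (zero_run, value) pairs; trailing zeros collapse into 'EOB'."""
    pairs, run = [], 0
    for value in seq:
        if value == 0:
            run += 1
        else:
            pairs.append((run, value))
            run = 0
    pairs.append("EOB")                        # end-of-block marker
    return pairs

# Hypothetical quantized 4x4 block: non-zero values cluster at low frequencies.
block = [[100, 1, 0, 0],
         [1, 1, 0, 0],
         [1, -2, 0, 0],
         [0, 0, 0, 0]]

scanned = [block[r][c] for r, c in zigzag_indices(4)]
encoded = run_length_encode(scanned)
print(scanned)
print(encoded)
```

Because the scan pushes the zeros to the end of the sequence, the sixteen values collapse into a handful of symbols.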

So far, this is little more than a variant of the very common JPEG compression. You can read more on this topic in our TechNote "How does the JPEG Compression Work?". However, video data consists of many images (called "frames"). Since consecutive frames usually differ only slightly and their content does not change randomly, it makes sense to exploit the correlation between consecutive frames for compression. This way of encoding video data is called "interframe compression", in contrast to the "intraframe compression" discussed above.
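The idea behind interframe compression can be sketched in Python with plain frame differencing. This is a deliberate simplification with made-up frame data; real codecs predict blocks with motion compensation and then transform and quantize the residual.

```python
def residual(prev_frame, cur_frame):
    """Per-pixel difference between the current and the previous frame."""
    return [[c - p for p, c in zip(p_row, c_row)]
            for p_row, c_row in zip(prev_frame, cur_frame)]

def reconstruct(prev_frame, res):
    """Decoder side: add the residual to the already decoded previous frame."""
    return [[p + r for p, r in zip(p_row, r_row)]
            for p_row, r_row in zip(prev_frame, res)]

# Hypothetical 4x6 frames: a static grey background where only two pixels change.
prev_frame = [[50] * 6 for _ in range(4)]
cur_frame = [row[:] for row in prev_frame]
cur_frame[1][2] = 90
cur_frame[1][3] = 95

res = residual(prev_frame, cur_frame)
changed = sum(1 for row in res for value in row if value != 0)
decoded = reconstruct(prev_frame, res)

print(changed, decoded == cur_frame)
```

Only the changed pixels produce non-zero residual values, so the difference is much cheaper to encode than the full frame.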

There are three main compressed frame types, although, for example, in H.264/MPEG-4 AVC it is possible to define these types not only for a whole frame but also for its parts, called "slices".

| I-Frame | P-Frame | B-Frame |
| --- | --- | --- |
| This is an intra-coded frame that, similar to a JPEG image, does not need any other frames from the video stream in order to be decoded. | This is a predicted frame that contains only the changes from the preceding frame. Since P- and B-Frames contain only part of the image information, they improve the compression ratio, reducing the amount of data to be saved or transmitted. | Similar to the predicted frames, bidirectionally predicted frames contain only the changes relative to the preceding and the following frames, saving even more bandwidth or storage space. |

| I | B | B | P | B | B | P | B | B | P | B | B | I | B | B | P | B | B | P | B | B | P | B | B | I | B | B | P | B | B | P | B | B | P | B | B |

Single I-Frame Group of Pictures (GOP)
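The frame sequence shown above can be reproduced with a short Python sketch. The parameter names gop_size and p_distance are chosen for this example only; the values 12 and 3 are read off the sequence above.

```python
def gop_pattern(gop_size=12, p_distance=3):
    """Frame types of one GOP in display order: an I-Frame, then P-Frames with B-Frames between."""
    types = []
    for i in range(gop_size):
        if i == 0:
            types.append("I")            # every GOP starts with an I-Frame
        elif i % p_distance == 0:
            types.append("P")            # every p_distance-th frame is predicted
        else:
            types.append("B")            # frames in between are bidirectional
    return types

def random_access_points(frame_types):
    """Indices at which a decoder can start decoding (the I-Frames)."""
    return [i for i, t in enumerate(frame_types) if t == "I"]

one_gop = gop_pattern()
stream = one_gop * 3                     # three consecutive GOPs, as in the sequence above
print(" ".join(one_gop))
print(random_access_points(stream))
```

A longer GOP means fewer I-Frames and therefore less data, at the cost of fewer random access points.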

I-Frames are required for random access: a decoder can start decoding at any I-Frame. Furthermore, an encoder can generate them when the video content has changed so much that P- or B-Frames cannot reasonably be generated. P- and B-Frames both require the frames they reference to be decoded first. They can contain either image data or motion vectors that describe how the image content has moved relative to a reference frame, for example an I-Frame.
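How a motion vector might be found can be sketched in Python with an exhaustive block-matching search. This assumes a sum-of-absolute-differences cost, a tiny search window and made-up frame content; production encoders use far more elaborate search strategies.

```python
def sad(ref, cur, bx, by, dx, dy, size):
    """Sum of absolute differences between a block in cur and a shifted block in ref."""
    return sum(abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
               for y in range(size) for x in range(size))

def best_motion_vector(ref, cur, bx, by, size=4, search=2):
    """Search a small window in ref for the best match of the block at (bx, by) in cur."""
    height, width = len(ref), len(ref[0])
    best, best_cost = (0, 0), sad(ref, cur, bx, by, 0, 0, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            inside = (0 <= by + dy and by + dy + size <= height and
                      0 <= bx + dx and bx + dx + size <= width)
            if not inside:
                continue                 # skip candidates outside the frame
            cost = sad(ref, cur, bx, by, dx, dy, size)
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best, best_cost

def make_frame(x0, y0):
    """Hypothetical 8x8 frame: a bright 4x4 square at (x0, y0) on a black background."""
    return [[200 if x0 <= x < x0 + 4 and y0 <= y < y0 + 4 else 0
             for x in range(8)] for y in range(8)]

ref = make_frame(1, 2)                   # reference frame, e.g. a decoded I-Frame
cur = make_frame(3, 3)                   # the square has moved 2 right and 1 down
vector, cost = best_motion_vector(ref, cur, bx=3, by=3)
print(vector, cost)
```

The vector points from the block in the current frame back to its best match in the reference frame. With a perfect match, the block can be described by the vector alone.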

Using FFmpeg, you can easily extract single frames from a video file. For example, the following line saves the I-Frames found in a ten-second interval starting at the fifth second as lossless TIFF files named image_00001.tif, image_00002.tif and so on. The select filter keeps only the I-Frames; older FFmpeg builds use -vsync vfr instead of -fps_mode vfr.

ffmpeg -i video.avi -ss 00:00:05 -t 00:00:10 -vf "select='eq(pict_type,I)'" -fps_mode vfr image_%05d.tif

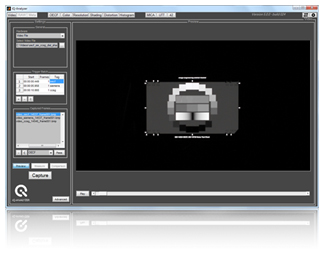

To analyze the image quality of the extracted frames, a much more convenient approach is to use the Video Module of our iQ-Analyzer, which lets you extract frames in a batch and analyze them comfortably in any other module of the software.
